OpenFL: an open source framework for Federated Learning

Open Federated Learning (OpenFL) is a Python-based Federated Learning Framework that enables organizations to train and validate machine learning models on sensitive data. It increases privacy by allowing collaborative model training or validation across local private datasets without ever sharing that data with a central server.

OpenFL enables access to larger and more diverse datasets. This helps improve the accuracy and generalizability of AI models while complying with privacy regulations in domains such as healthcare and financial services.

OpenFL was used in one of the world’s largest healthcare federations to train and validate brain tumor segmentation models across 71 sites on 6 continents. The federation improved model accuracy, even on out-of-sample data.

User Experience

Supports popular DL models

High level API / Low level API

Use GPU, CPU, or other accelerators

Privacy & Security

Mutual TLS

Trusted execution environments

Minimizes open ports

Privacy preserving algorithms

Scalability

Grow from a simulation to a large federation

Efficient compression methods

About OpenFL

OpenFL was originally developed by Intel. In January 2023, Intel, VMware, University of Pennsylvania, and Flower Labs established OpenFL under The Linux Foundation’s LF AI and Data project.

- Framework agnostic: Supports deep learning frameworks like PyTorch, TensorFlow, JAX and Flax, and is extensible to other machine learning libraries.

- Secure and privacy preserving: Supports a range of threat and trust scenarios in a federation. It supports hardware-based trusted execution environments (TEEs) and offers software-based methods such as differential privacy. This protects model IP, mitigates attacks on a federated learning system, and preserves data privacy.

- Flexible and easy to use: Data scientists can easily adapt their existing code for federation, and developers can build custom workflows via a Metaflow-inspired interface.

- Scalable: Start with simulation and grow to a large federation.
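
To make the differential privacy point above more concrete, here is a minimal sketch of one common software-based approach: clip each model update to a maximum L2 norm, then add Gaussian noise before it leaves the site. This is an illustration only and does not use OpenFL's API; the function names `clip_update` and `privatize_update` and the parameters `max_norm` and `noise_multiplier` are hypothetical, and the sketch does not compute a privacy budget.

```python
import math
import random


def clip_update(update, max_norm):
    """Scale the update down so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(v * v for v in update))
    if norm <= max_norm or norm == 0.0:
        return list(update)
    scale = max_norm / norm
    return [v * scale for v in update]


def privatize_update(update, max_norm, noise_multiplier, rng):
    """Clip the update, then add zero-mean Gaussian noise to each entry.

    The noise standard deviation is noise_multiplier * max_norm
    (an assumption of this sketch, not a value taken from OpenFL).
    """
    clipped = clip_update(update, max_norm)
    sigma = noise_multiplier * max_norm
    return [v + rng.gauss(0.0, sigma) for v in clipped]


# Hypothetical usage: a site perturbs its update before sending it out.
rng = random.Random(0)  # seeded only to make the example repeatable
raw_update = [3.0, 4.0]
noisy_update = privatize_update(raw_update, max_norm=1.0,
                                noise_multiplier=0.5, rng=rng)
```

Clipping bounds how much any single site can move the shared model, and the noise scale is tied to that bound; larger `noise_multiplier` values trade model accuracy for stronger privacy.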

Projects / Use Cases

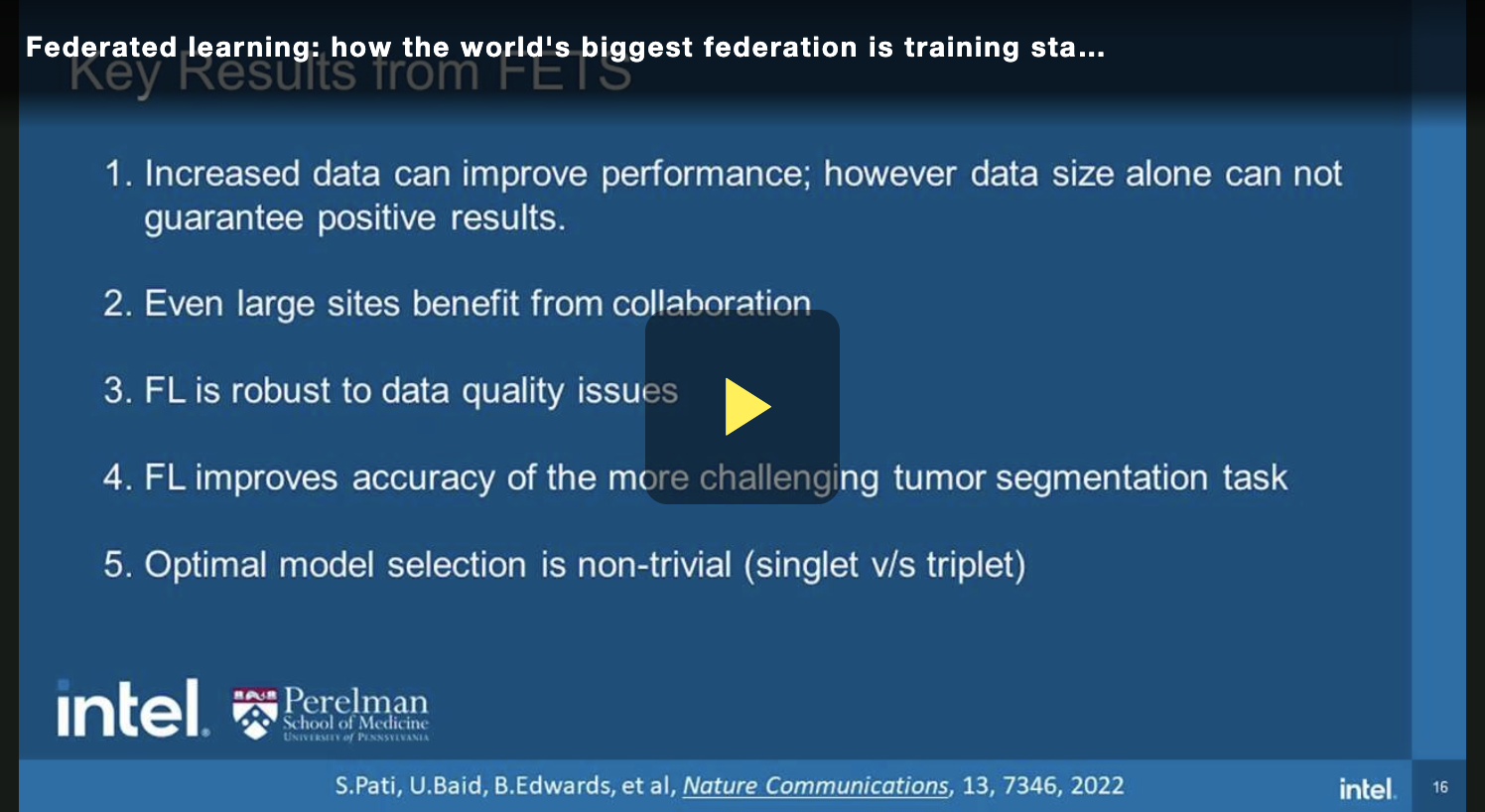

The Federated Tumor Segmentation (FeTS) initiative describes the ongoing development of i) the largest international federation of healthcare institutions, and ii) an open-source toolkit with a user-friendly GUI, aiming to gain knowledge for tumor boundary detection from ample and diverse patient populations without sharing any patient data.

FeTS 2022 focuses on benchmarking methods for federated learning (FL), and particularly weight aggregation methods for federated training, and algorithmic generalizability on out-of-sample data based on federated evaluation. FeTS 2022 targets the task of brain tumor segmentation.

This summer, Frontier Development Lab researchers conducted a landmark astronaut health study with Intel AI Mentors to better understand the physiological effects of radiation exposure on astronauts.

Publications

Physics in Medicine and Biology

Federated learning (FL) is a computational paradigm that enables organizations to collaborate on machine learning (ML) and deep learning (DL) projects without sharing sensitive data, such as patient records, financial data, or classified secrets.

Nature Communications

Although machine learning (ML) has shown promise across disciplines, out-of-sample generalizability is concerning. This is currently addressed by sharing multi-site data, but such centralization is challenging/infeasible to scale due to various limitations. Federated ML (FL) provides an alternative paradigm for accurate and generalizable ML, by only sharing numerical model updates.

Nature.com

Federated learning is a novel paradigm for data-private multi-institutional collaborations, where model-learning leverages all available data without sharing data between institutions, by distributing the model-training to the data-owners and aggregating their results.
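
The pattern these abstracts describe (train locally at each data owner, share only numerical model updates, aggregate the results) can be sketched in a few lines of plain Python. The sketch below uses weighted federated averaging on a toy linear model; it is not OpenFL code, and `local_update`, `federated_average`, `run_round`, and the toy `sites` data are all hypothetical names and values chosen for illustration.

```python
def local_update(weights, data, lr=0.1):
    """One pass of per-sample gradient descent for y = w*x + b on local data."""
    w, b = weights
    for x, y in data:
        err = (w * x + b) - y
        w -= lr * err * x
        b -= lr * err
    return [w, b]


def federated_average(updates, sizes):
    """Average site updates, weighting each by its number of samples."""
    total = sum(sizes)
    n_params = len(updates[0])
    return [
        sum(u[i] * n for u, n in zip(updates, sizes)) / total
        for i in range(n_params)
    ]


def run_round(global_weights, site_datasets):
    """One federated round: only weights leave a site, never its raw data."""
    updates = [local_update(list(global_weights), d) for d in site_datasets]
    sizes = [len(d) for d in site_datasets]
    return federated_average(updates, sizes)


# Two hypothetical sites holding private samples of y = 2x + 1.
sites = [
    [(0.0, 1.0), (1.0, 3.0)],
    [(2.0, 5.0), (3.0, 7.0), (4.0, 9.0)],
]
weights = [0.0, 0.0]
for _ in range(1000):
    weights = run_round(weights, sites)
```

In this toy setup the aggregated weights approach the shared underlying relationship (slope 2, intercept 1) even though neither site ever sees the other's samples. Real federations add secure transport, participant authentication, and more elaborate aggregation rules on top of this basic loop.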